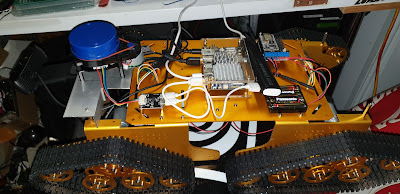

Life with Deep Racer - Part 1

My Deep Racer has finally arrived back in Australia. There are a few things I have learned already, and I will share them as I go along.

Charging the batteries

Power

The charger for the compute battery pack simply needs a 3-pin appliance cable to connect it to non-US power outlets. Yes, you can use a plug adapter, but I find they get loose over time.

The LiPo charger needs a 9V 0.5A plug pack. The centre pin is positive.

There is a considerable power drain on the compute (CPU) battery even when the module is off. My advice is to disconnect both batteries when not in use, because that big pack takes a long time to charge.

Tail light colours

Note that these colours might vary depending on the version of software you're running. When I first unpacked it, there were no lights at all.

Red - Something is wrong. The usual cause is failure to connect to WiFi. The official documentation lists some troubleshooting tips.
Blue - Connecting to the ...