DIY Self-Driving - Part 9 - New Imaging

Please Note: Unlike most of my projects which are fully complete before they are published, this project is as much a build diary as anything else. Whilst I am attempting to clearly document the processes, this is not a step-by-step guide, and later articles may well contradict earlier instructions.

If you decide you want to duplicate my build, please read through all the relevant articles before you start.

We have a lot of work to do in a pretty short space of time now, with new competitive opportunities coming up in the very near future.

To be honest, the line detection has simply not been good enough, and this is behind some of the poor performance I have been seeing from the car. It does work, but it is not accurate enough. There are two issues here which need to be dealt with:

- The core processor is just not powerful enough; and

- The optics aren't good enough.

Processing

As I noted in my "Next Iteration" article, I am looking at other processor boards. At the moment, I am experimenting with four different units:

- Intel NUC;

- HardKernel ODROID XU4;

- FriendlyARM NanoPC T4;

- Up! UpBoard

These are being benchmarked against the Raspberry Pi 3B used in the current platform on a number of criteria:

- Overall Performance;

- Power Efficiency;

- Ease of Integration
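One way to put numbers on performance and power efficiency is to time the same workload on each board and divide the throughput by the measured power draw. The sketch below is a hypothetical harness, not part of the car's code: `process_frame` stands in for one pass of the vision pipeline, and the wattage is assumed to come from an external meter (for example a USB power meter) rather than from the board itself.

```python
import statistics
import time
from typing import Callable


def benchmark_fps(process_frame: Callable[[], object], warmup: int = 5, runs: int = 50) -> dict:
    """Time repeated calls to process_frame and summarise throughput.

    process_frame is a hypothetical stand-in for one pass of the vision
    pipeline (capture, OpenCV processing and so on) on the board under test.
    """
    # Warm-up calls let caches and lazy initialisation settle before timing.
    for _ in range(warmup):
        process_frame()

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        process_frame()
        timings.append(time.perf_counter() - start)

    # The median is less sensitive to occasional OS hiccups than the mean.
    median_s = statistics.median(timings)
    return {
        "median_ms": median_s * 1000.0,
        "worst_ms": max(timings) * 1000.0,
        "fps": 1.0 / median_s if median_s > 0 else float("inf"),
    }


def fps_per_watt(fps: float, watts: float) -> float:
    """Power-efficiency figure: frames processed per second per watt drawn."""
    return fps / watts
```

At 25 fps each frame has a budget of 1000 / 25 = 40 ms, so a `median_ms` comfortably below 40 (with `worst_ms` not far behind) is the figure to look for on each candidate board.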

Performance and ease of integration are the key criteria for me. OpenCV is pretty demanding, and when we start adding some of the learning-based functionality, the load on the system is going to grow considerably. Integration means we need to be able to interface the new processor platform with the rest of the car, so it needs well-documented GPIO capabilities. More news on this as it comes to hand.

Imaging

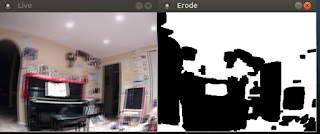

I am also working with a pretty amazing new camera unit manufactured by ELP. This module includes two physical cameras and an onboard CPU (ARM, but the specs are unclear) which stitches the two images together. It is intended for use in VR appliances and similar roles, but I like it because if we split the image into its components and then mathematically add the two images, we get a single image with depth information:

(This image was built at 25 fps using the ODROID XU4)

It is very reminiscent of those old red/blue "3D" posters. The further away an object is, the more closely its two images line up. The camera is also much faster than the older single-lens ELP device previously fitted to the car.
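The split-and-add step can be sketched in Python with NumPy. This is a minimal illustration, not the code that produced the image above: it assumes the ELP module delivers one frame with the left and right views side by side, each half the frame width, in OpenCV's BGR channel order. The helper names are hypothetical. `blend` averages rather than sums so bright pixels do not overflow, and `anaglyph` shows the red/blue poster variant.

```python
import numpy as np


def split_side_by_side(frame: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split an assumed side-by-side stereo frame (H x 2W x 3) into left and right views."""
    width = frame.shape[1]
    if width % 2:
        raise ValueError("expected an even frame width for a side-by-side stereo pair")
    half = width // 2
    return frame[:, :half], frame[:, half:]


def blend(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Average the two views; near objects (large offset) show up as double images."""
    # Widen to uint16 so the sum of two uint8 pixels cannot wrap around.
    summed = left.astype(np.uint16) + right.astype(np.uint16)
    return (summed // 2).astype(np.uint8)


def anaglyph(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Simple colour anaglyph: red from the left view, green and blue from the right.

    Assumes BGR channel order, as returned by OpenCV's VideoCapture.
    """
    out = right.copy()
    out[:, :, 2] = left[:, :, 2]  # channel 2 is red in BGR order
    return out
```

Viewed through red/cyan glasses, the anaglyph output reproduces the poster effect; the plain blend is the closer match to simply adding the two images together.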

Clearly, just adding the two images together is not the end of the story, and I am looking very closely at the stereo imaging functions in OpenCV 3 to extract the depth information. I can then massage this into something the navigation layer can use.
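To show what the OpenCV stereo functions are doing, here is a toy sum-of-absolute-differences block matcher in NumPy, followed by the standard pinhole stereo relation Z = f * B / d, which is why distant objects (small disparity d) line up almost exactly. This is an illustrative sketch under stated assumptions: the two views must already be rectified so that matching points share a row, and the focal length (in pixels) and baseline (in metres) are placeholders that would have to come from calibrating the actual ELP module. On the car, OpenCV's `cv2.StereoBM_create(numDisparities=..., blockSize=...)` and its `compute()` method do the same job far faster; `compute()` returns 16-bit fixed-point disparities scaled by 16.

```python
import numpy as np


def block_match_disparity(left: np.ndarray, right: np.ndarray,
                          max_disparity: int = 16, block: int = 5) -> np.ndarray:
    """Toy SAD block matcher on rectified greyscale images.

    For each pixel in the left image, slide a block leftwards along the same row
    of the right image and keep the shift (disparity) with the lowest SAD cost.
    Border pixels that cannot be matched are left at 0.
    """
    if block % 2 == 0:
        raise ValueError("block size must be odd")
    left = left.astype(np.float32)
    right = right.astype(np.float32)
    h, w = left.shape
    r = block // 2
    disparity = np.zeros((h, w), dtype=np.int32)
    for y in range(r, h - r):
        for x in range(r + max_disparity, w - r):
            patch = left[y - r:y + r + 1, x - r:x + r + 1]
            costs = [
                np.abs(patch - right[y - r:y + r + 1, x - d - r:x - d + r + 1]).sum()
                for d in range(max_disparity + 1)
            ]
            disparity[y, x] = int(np.argmin(costs))
    return disparity


def depth_from_disparity(disparity_px, focal_px: float, baseline_m: float):
    """Pinhole stereo relation Z = f * B / d; zero disparity maps to infinity."""
    d = np.asarray(disparity_px, dtype=np.float64)
    with np.errstate(divide="ignore"):
        return np.where(d > 0, focal_px * baseline_m / d, np.inf)
```

The pure-Python loops are far too slow for 25 fps and only illustrate the matching idea. With `StereoBM`, `numDisparities` must be a multiple of 16 and `blockSize` an odd number.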

The other option is to use the images in a "stitch" mode and create a single wide image with less fish-eye distortion than a wide-angle lens would give.
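For the stitch mode, the simplest approach, if the two views overlap by a known number of columns, is to place them side by side and feather the seam. The sketch below assumes the overlap width has been measured beforehand and that the views need no rotation, scaling or lens correction; `stitch_with_overlap` is a hypothetical helper. OpenCV's `Stitcher` class is the fuller, feature-based alternative.

```python
import numpy as np


def stitch_with_overlap(left: np.ndarray, right: np.ndarray, overlap: int) -> np.ndarray:
    """Join two equal-height views whose last/first `overlap` columns show the same scene.

    Inside the overlap the output fades linearly from the left view to the right
    view (a simple feather blend). Works for greyscale or multi-channel uint8 images.
    """
    if left.shape[0] != right.shape[0]:
        raise ValueError("views must have the same height")
    if not 0 < overlap <= min(left.shape[1], right.shape[1]):
        raise ValueError("overlap must be positive and no wider than either view")
    l = left.astype(np.float32)
    r = right.astype(np.float32)
    # Weight for the left view falls from 1 to 0 across the overlap columns.
    alpha = np.linspace(1.0, 0.0, overlap, dtype=np.float32)
    alpha = alpha.reshape((1, overlap) + (1,) * (l.ndim - 2))
    seam = alpha * l[:, -overlap:] + (1.0 - alpha) * r[:, :overlap]
    out = np.concatenate([l[:, :-overlap], seam, r[:, overlap:]], axis=1)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

A fixed overlap is cheap enough for every frame, but it only holds while the two lenses stay rigidly mounted relative to each other.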

In any case, it's been a long night, but the car is definitely getting smarter!

Want to help?

If you would like to make a financial contribution to the project, please go to my Patreon Page at:

Shameless Plug:

I use PCBWAY.com for my printed circuit boards. If you need PCB manufacturing, consider the sign-up link below. You get the service, and I get credits. Potentially a win for both of us!
